
What Do Neural Networks Dream Of?

Deep Dream

To start off, what exactly is DeepDream? Simply put, it is a fun algorithm for generating psychedelic-looking images.

Okay, so Google, as we all know, has long been leading the game of image recognition and classification with its state-of-the-art projects and papers. While creating these image recognition and classification tools, the super-brainers at Google came up with a technique to help them understand and visualize how exactly neural networks carry out different classification tasks, so that they could check what a network had learned over the course of training and improve it if needed. But who knew this would turn out to be a fun artistic project where you could generate a remix of a number of visual concepts?

Normally we train a classification model by feeding it a number of training examples and then gradually adjusting the network parameters until the model learns to classify the examples correctly. Each training example is fed into the input layer, which passes it to the next layer. That layer does some computations (weighted sums at its nodes, followed by an activation function) and passes the result on to the next layer for more of the same, until the output layer is reached, which finally tells you what the object in the training example is. The weights themselves are adjusted afterwards, based on how wrong that output was.
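To make that training loop concrete, here is a minimal sketch, assuming a single sigmoid neuron and a made-up four-example dataset (neither has anything to do with the Inception model). The names `forward` and `train`, the learning rate and the epoch count are all my own illustrative choices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(weights, bias, x):
    """Forward pass: compute the prediction; no weights change here."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(examples, labels, lr=0.5, epochs=2000):
    """Gradient descent on the parameters of a single sigmoid neuron."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            pred = forward(weights, bias, x)
            # Gradient of the cross-entropy loss w.r.t. the pre-activation.
            error = pred - y
            # Step *down* the gradient to reduce the loss.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

# Toy data (hypothetical): the label is 1 only when both features are 1.
examples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train(examples, labels)
predictions = [round(forward(weights, bias, x)) for x in examples]
print(predictions)
```

The point to take away is which quantity gets updated: here the input stays fixed and the weights move. DeepDream flips exactly that.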

Now let's turn this neural network upside-down: give it an image and tell it to draw out its interpretation of anything it can see. The trained weights stay fixed this time. Instead, we pick a layer and nudge the image itself so that it more closely resembles whatever that layer responded to, and then feed the modified image back in as the new input. This goes on for a while, until the network's interpretation starts taking form in the original input image, and voila, your input image has changed altogether into something the neural network thought it saw in it.

Easier said than done. When you tell a machine to interpret anything it can see, it doesn’t really know what to look for and what not to; it just sees a number of features. To actually get a sense of what any particular layer has learned, we need to maximize the activations of that layer. The gradient at that layer is set equal to the activations from that layer (which amounts to maximizing half the sum of their squares), and then gradient ascent is done on the input image, thereby maximizing the activations of that layer. Then we can say, okay, maybe the neural net observed some squares or strokes of lines in that image.
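Here is a minimal sketch of that gradient ascent idea, assuming a tiny stand-in "layer" (a fixed weight matrix followed by ReLU) and a four-pixel "image". None of this is the Inception model or the original DeepDream code; `layer_activations`, `dream_step`, the weights and the step size are hypothetical names and values picked for illustration.

```python
def layer_activations(image, weights):
    """A stand-in 'layer': ReLU(W @ image), with the image as a flat list."""
    return [max(0.0, sum(w * px for w, px in zip(row, image)))
            for row in weights]

def objective(image, weights):
    """Half the sum of squared activations of the layer."""
    return 0.5 * sum(a * a for a in layer_activations(image, weights))

def input_gradient(image, weights):
    """Gradient of the objective w.r.t. the image pixels.

    d(objective)/d(activation) is the activation itself, which is the
    'gradient set equal to the activations' step. The chain rule through
    ReLU(W @ image) then gives sum_i a_i * W[i][j] for pixel j
    (inactive units have a_i == 0 and contribute nothing).
    """
    acts = layer_activations(image, weights)
    return [sum(a * row[j] for a, row in zip(acts, weights))
            for j in range(len(image))]

def dream_step(image, weights, step_size=0.1):
    """One gradient ascent step on the image; the weights stay frozen."""
    grad = input_gradient(image, weights)
    # Normalise by the mean absolute gradient; fall back to 1.0 if it is zero.
    scale = sum(abs(g) for g in grad) / len(grad) or 1.0
    return [px + step_size * g / scale for px, g in zip(image, grad)]

# Hypothetical frozen weights: two units looking at a 4-pixel image.
weights = [[1.0, -1.0, 0.5, 0.0],
           [0.0, 0.5, -0.5, 1.0]]
image = [0.2, 0.1, 0.4, 0.3]

before = objective(image, weights)
for _ in range(20):
    image = dream_step(image, weights)
after = objective(image, weights)
print(before, after)
```

In this sketch the pixels are free to drift outside any valid range; a real pipeline would also clip or rescale the image before saving it.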

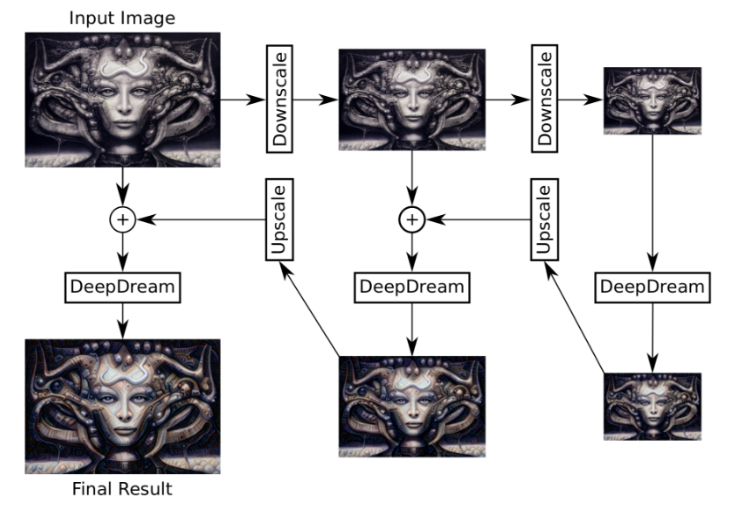

This, however, isn’t enough to produce a cool and distinguishable figure in the image. On top of all this interpretation and gradient ascent, a number of image-smoothing techniques are also applied: Gaussian blurring, repeated down-scaling, running gradient ascent on the down-scaled copies, and merging all of these back into the final output image for the layer.
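The blur, down-scale and merge steps can be sketched roughly as follows. This is a pure-Python illustration under my own assumptions, not the code used for the images in this post: the image is a grayscale list of lists whose sides are divisible by `2 ** depth`, `optimize` is a placeholder for whatever gradient ascent routine you use, and `blend`, `sigma` and `radius` are made-up defaults.

```python
import math

def gaussian_kernel(sigma, radius):
    """1-D Gaussian weights, normalised to sum to 1."""
    raw = [math.exp(-(i * i) / (2.0 * sigma * sigma))
           for i in range(-radius, radius + 1)]
    total = sum(raw)
    return [v / total for v in raw]

def blur_rows(image, kernel):
    """Convolve every row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    width = len(image[0])
    return [[sum(k * row[min(max(x + i - radius, 0), width - 1)]
                 for i, k in enumerate(kernel))
             for x in range(width)]
            for row in image]

def transpose(image):
    return [list(col) for col in zip(*image)]

def gaussian_blur(image, sigma=1.0, radius=2):
    """Separable Gaussian blur: blur the rows, then the columns."""
    kernel = gaussian_kernel(sigma, radius)
    return transpose(blur_rows(transpose(blur_rows(image, kernel)), kernel))

def downscale(image):
    """Halve each dimension by averaging 2x2 blocks (assumes even sizes)."""
    return [[(image[y][x] + image[y][x + 1]
              + image[y + 1][x] + image[y + 1][x + 1]) / 4.0
             for x in range(0, len(image[0]), 2)]
            for y in range(0, len(image), 2)]

def upscale(image):
    """Double each dimension by repeating pixels (nearest neighbour)."""
    return [[px for px in row for _ in range(2)]
            for row in image for _ in range(2)]

def dream_octaves(image, optimize, depth=2, blend=0.2):
    """Process a blurred, down-scaled copy first, merge it back, then optimise."""
    if depth > 0 and len(image) >= 4 and len(image[0]) >= 4:
        small = downscale(gaussian_blur(image))
        small = dream_octaves(small, optimize, depth - 1, blend)
        coarse = upscale(small)
        # Keep `blend` of the original and take the rest from the coarse result.
        image = [[blend * px + (1.0 - blend) * c for px, c in zip(row, crow)]
                 for row, crow in zip(image, coarse)]
    return optimize(image)

# An 8x8 image with a single bright pixel, and a do-nothing placeholder optimiser.
image = [[0.0] * 8 for _ in range(8)]
image[3][4] = 1.0
blurred = gaussian_blur(image)
result = dream_octaves(image, optimize=lambda img: img)
print(len(result), len(result[0]))
```

Working on the small copies first lets the coarse patterns settle before the fine detail is added, which is why the merged result tends to look smoother than gradient ascent on the full-size image alone.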

Now when you take hundreds of such transformed images generated from the initial input image, use them as frames and encode them into a video with some codec, you get yourself a beautiful psychedelic transformation of an image that looks more like a dream. Hence the name Deep Dream.

If you’ve followed along this far, you’re surely thinking: okay, but where do I get a trained classifier model that Google trained on a bunch of images, to use for creating cooler deep-dreams? Well, Google has many such models, each with its own advantages and disadvantages. I used Google’s “Inception model 5h for TensorFlow”. This variant of the Inception model is easier to use for deep-dream and other imaging techniques because it allows the input images to be of any size, and the optimized images come out comparatively smoother.

It is unclear exactly which images the Inception model was initially trained on, because the Google developers have (as usual) forgotten to document it. The following is what I could observe and make out from running the neural net step by step at different layers:

layer 1: mostly wavy lines

layer 2: a lot of line strokes

layer 3: boxes

layer 4: circles

layer 5: eyes, lots of creepy eyes

layer 6: dogs, cats, bears, foxes

layer 7: faces, buildings

layer 8: fishes, frogs, reptilian eyes

layer 10: monkeys, lizards, snakes, ducks

and by layer 11 it was all too bizarre and horrifying to express in words.

Here are a few attempts at generating deep-dream visualizations on different images:

Link to Google’s actual white paper. Check out the actual GitHub repo.

